A few weeks ago, we posted an article on how humanoids are made. I am pleased to report that, based on the feedback, there is way more interest than I would have anticipated. So... we decided to expand our initial plans on the subject. As always, we try to reach a broad audience and avoid hyper geek speak; however, if you want an extremely geeky deep dive, speak up either in our Telegram chat or anywhere else you know we are listening.

If by chance you missed the first article, or a friend sent this to you via our Telegram chat, here is the direct link. If you just want the gist, there's a 300-word summary of it below. Thanks, CLOUD.IA!

Summary

Designing humanoid robots is a complex process that begins with concept and design. This stage is crucial as it lays the groundwork for everything that follows. The purpose and functionality of the robot must first be identified, which then guides all further design and development decisions. Factors such as the tasks the robot is designed to perform, the environment it needs to navigate, or the type of interactions it will have, all influence its design and component selection.

Following the initial design phase, a preliminary sketch or 3D model of the robot is made, outlining the rough size, shape, and location of major components like the processor and power supply. Detailed design is typically done using Computer-Aided Design (CAD) software, which allows the creation of precise 3D models. CAD, with its history dating back to the 1960s, has transformed industries such as robotics, architecture, engineering, and animation.

The robot's movement can be simulated using these CAD models to understand how it might interact with its environment. The simulations can also perform stress tests to see how the robot's materials will withstand different forces. However, when testing complex parts such as limbs and hands, simulators are often a step behind.

The exterior design of a robot often frames its intended purpose, a principle referred to as "design intent" in mechanical engineering. For example, home-assistance robots often have a monoblock figure, while industrial humanoid robots like the Tesla Optimus are designed to conceal their wires and parts to avoid increasing engineering challenges.

Finally, the article mentions that the next part of the series will explore the complexities of designing robotic hands for humanoids, highlighting the challenges faced by designers and material engineers, which is why many robots do not have fingers.

Perhaps in 50 years, sophisticated types of AGI will design and build humanoids with the ease of a single thought. However, we are not there yet, so there is still a lot to struggle with when it comes to the electromechanical design of humanoids. Of all the complex parts of those robot bodies, hands are perhaps at the top of the list.

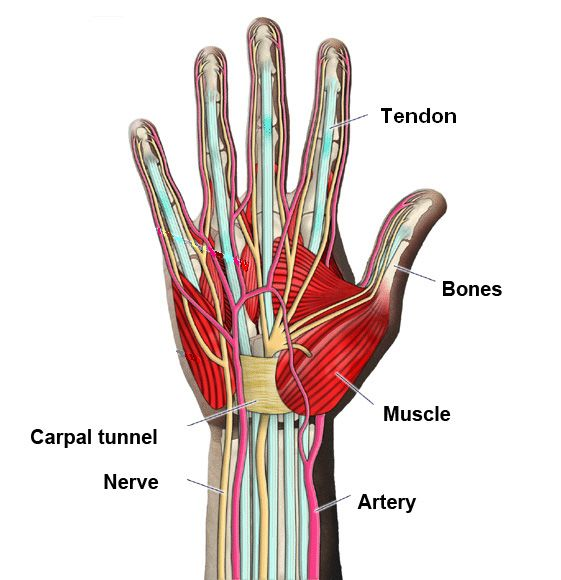

In the realm of robotics, the quest to emulate the dexterity and versatility of the human hand stands (pun!) as one of the most challenging endeavors. With its intricate web of bones, muscles, and nerves, the human hand is a marvel of evolutionary engineering, capable of a range of tasks from the delicate to the robust.

Replicating this versatility in a robotic hand is no small feat, requiring a complex interplay of design, materials, programming, and, above all, a deep understanding of the human hand itself.

The journey of creating a humanoid hand begins with the concept and design phase, the bedrock upon which all robotic creations are built. The initial design must answer key questions such as what tasks the robot is designed to perform and whether it is meant to interact with people, perform a specific job, or navigate a particular type of terrain. These factors greatly influence the design and the type of components used. For instance, a robot designed for underwater exploration would require waterproof materials and the ability to withstand pressure, while one intended for human interaction may necessitate softer, more flexible parts and a non-threatening appearance. Let's dig a bit deeper.

The Design Complexity: Mimicking Nature's Best

The first challenge that engineers face when creating humanoid hands is to replicate the complexity and precision of the human hand itself. Each of our hands has 27 bones, controlled by a network of muscles and tendons. This complex design allows for a wide range of motions and a high degree of dexterity. The problem is a fascinating blend of biological and mechanical engineering, a journey into the depths of miniaturization, precision, and control.

In the design phase, engineers make use of advanced software tools that enable them to create detailed, three-dimensional blueprints of the robotic hand. This blueprint maps out each joint, actuator, and sensor, laying the groundwork for the intricate task of assembling the robotic hand.

Material Selection: Striking a Balance

When selecting materials, engineers must balance the need for durability, flexibility, and the weight of the final product. Often, lightweight yet robust materials like aluminum and various composites are used for the internal skeleton, while the outer "skin" could be made from flexible, responsive materials like silicone.

A trend in recent years has been towards "soft robotics," utilizing more elastic materials like rubber and silicone that can be pneumatically or hydraulically actuated. Soft robotics can lead to more anthropomorphic motion and improved safety when interacting with people, as the materials are less likely to cause injury than hard metals.

Inside the Hand: Sensory Feedback and Actuation

Creating humanoid hands that can perform tasks as well as a human requires sophisticated sensor technology. This technology provides feedback about the environment, such as temperature, pressure, and texture.

Tactile sensors embedded in the fingers help the robotic hand distinguish between a soft, delicate object like a sponge and a hard, rigid object like a brick. In combination with sophisticated machine learning algorithms, this allows the hand to adapt its grip and apply the correct amount of pressure.
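To make the idea a little more concrete, here is a minimal sketch of how a hand might tell a sponge from a brick and pick its grip accordingly. It is not how any particular robot does it: instead of machine learning it uses a simple stiffness estimate plus a proportional control step, and every name, unit, and threshold (`estimate_stiffness`, `soft_threshold`, the kPa targets) is an assumption made up for illustration.

```python
# Hypothetical sketch: all thresholds, units, and names are illustrative assumptions.

def estimate_stiffness(displacement_mm: float, pressure_kpa: float) -> float:
    """Estimate object stiffness from a gentle probe squeeze.

    displacement_mm: how far the fingertip pressed into the object.
    pressure_kpa: pressure the tactile sensor reported at that depth.
    """
    if displacement_mm <= 0:
        raise ValueError("displacement must be positive")
    return pressure_kpa / displacement_mm


def choose_target_pressure(stiffness: float,
                           soft_threshold: float = 5.0,
                           soft_target_kpa: float = 2.0,
                           firm_target_kpa: float = 20.0) -> float:
    """Pick a gentle grip target for soft objects and a firmer one for rigid ones."""
    return soft_target_kpa if stiffness < soft_threshold else firm_target_kpa


def next_grip_force(current_force: float, measured_kpa: float, target_kpa: float,
                    gain: float = 0.1, max_force: float = 10.0) -> float:
    """One proportional control step toward the target pressure, clamped to a safe range."""
    force = current_force + gain * (target_kpa - measured_kpa)
    return min(max(force, 0.0), max_force)


if __name__ == "__main__":
    sponge = estimate_stiffness(displacement_mm=4.0, pressure_kpa=2.0)
    brick = estimate_stiffness(displacement_mm=0.5, pressure_kpa=10.0)
    print("sponge target:", choose_target_pressure(sponge))
    print("brick target:", choose_target_pressure(brick))
```

In a real hand, the hard-coded threshold would likely be replaced by a learned model, but the loop of "sense, decide, adjust" stays the same.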

Actuation, or the method by which the robotic hand moves, varies between designs. Some hands use electric motors for each joint, while others use cables or hydraulic systems. Soft robotics often use pneumatic systems, where air pressure inflates compartments to cause movement.

Assembly and Calibration

Once designed, the robotic hand's components are created using methods like 3D printing, casting, or CNC machining. They are then assembled, a process which can be as complex as the design phase, requiring precise alignment of all components.

Once assembled, the hand is calibrated. Each sensor and actuator is tested and adjusted to ensure accurate functioning. The hand is then programmed with basic motions, and machine learning algorithms are used to refine these movements over time.

That is a very short synthesis of something that can be really daunting. The process of assembling a robotic hand is akin to piecing together a complex puzzle.

Each part must perfectly fit and interact with the others to form a functional whole. The various components - the skeleton, actuators, sensors, and "skin" - all require careful integration.

Firstly, the internal skeleton or structure of the hand, typically crafted from materials such as aluminum or plastic composites, is assembled. This skeleton acts as the framework of the hand and has attachments for actuators and sensors. The assembly process is highly meticulous and requires precise alignment to ensure that each joint can move freely and accurately.

Next, the actuators, which are the 'muscles' of the robotic hand, are installed. Depending on the design, these might be motors, pneumatic systems, hydraulic systems, or even shape-memory alloys that change shape when heated. Each actuator must be correctly positioned and attached to the corresponding part of the skeleton, often through a complex system of miniature cables, levers, or gears.

Simultaneously or subsequently, the sensory systems are integrated. Sensors can be embedded in various locations, but most commonly in the fingertips or 'palm' of the hand, as these are the parts that most frequently interact with the environment. These sensors can detect factors like pressure, temperature, and even texture, allowing the hand to adjust its actions according to the feedback received.

Finally, the 'skin' of the hand is added. Depending on the design, this could be a hard shell or a soft, flexible covering that mimics the texture and elasticity of human skin. Not only does this outer layer protect the internal components, but it can also house additional sensors and contribute to the hand's overall aesthetic and anthropomorphic qualities.

Calibration: The Fine-Tuning Process

Once assembled, the robotic hand must undergo a crucial process known as calibration. This is the fine-tuning that ensures the hand's movements are accurate, its sensors correctly interpret input, and its various components function harmoniously.

As pointed out in the previous article, Agility Robotics is working on creating a work-helper humanoid hybrid. If you haven't seen their latest progress, check the video below. Notice the squeeze of the empty box when grip forces are applied.

For the first time, Digit acts as the bridge between conversation and real-world action. 🔊#LLM #PhysicalIntelligence pic.twitter.com/h7wFarvTnw

— Agility Robotics (@agilityrobotics) May 30, 2023

That is the tip of a gigantic iceberg when it comes to designing humanoid hands. You can grab an egg and move it from one spot to another on a countertop because your brain knows exactly how to work with your tactile perception and therefore apply the right pressure. Frittata on counters tends not to be very welcome, generally speaking 🙈

Actuators in the words of a comedian

Alright, so you're asking about an actuator, huh? You know, it's not every day someone comes to a comedian for a technical explanation. But hey, I'll give it a whirl!

So, what's an actuator, you might ask? It's like the muscleman at the circus, flexing its strongman muscles to move things around. But instead of showing off to impress the crowd, this 'Arnold Schwarzenegger' of the machine world is working hard behind the scenes, turning electrical, pneumatic, or hydraulic energy into motion. It's got one job: to move things. Linear, rotational, the hokey pokey, you name it!

Now, let's take a trip down memory lane. Back in the olden days, before Netflix and chill, we had our first taste of actuators with things like water wheels. Those were the great-great-great-grandparents of our modern actuators, flexing their muscles to grind wheat or pump water, all thanks to our buddy, H2O.

Fast forward to the steamy era of the Industrial Revolution, and we've got the steam engine. These beefy boys were the Arnold Schwarzenegger of their time, puffing away and turning that hot steam into useful work. Trains, factories, all were hustling and bustling thanks to these steam-powered hulks.

Then along came electricity. Now we're talking! Electric actuators were like the ninjas of the machine world. They were quieter, cleaner, and more efficient than their steam and pneumatic predecessors. They didn't need a sauna to get things moving; just a zap of electric juice was all it took!

And then, as we entered the age of robotics, we developed servo actuators. They're like the prima ballerinas of the actuator world. Not just about brute strength, these graceful gizmos have sensors for precision. They can tiptoe across the stage or perform a grand jeté with the utmost accuracy. They're the stars of the show in our high-tech world, making robotic hands move and groove just like us.

And here we are today, with actuators doing everything from making your car windows go up and down, to helping robots explore Mars. Who knows what's next? Maybe an actuator that can mix a perfect cocktail or perform stand-up comedy! Now there's an actuator I'd like to meet!

The calibration of actuators involves ensuring that they produce the correct amount of force and move accurately. Each joint's range of motion is tested and adjusted to match the intended design. This process is crucial to ensure that the hand can move smoothly and perform tasks effectively. If an actuator is too weak or strong, or if it moves too far or not far enough, the hand's functionality could be compromised.
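As a rough illustration of what "tested and adjusted" can mean in practice, the sketch below fits a straight line through two test moves of a joint and then uses it to correct future commands, while keeping requests inside the intended range of motion. The `JointCalibration` class, the `fit_joint` helper, and the two-point linear model are all assumptions for the example; real actuators often need richer models.

```python
from dataclasses import dataclass


@dataclass
class JointCalibration:
    """Calibration record for one joint (hypothetical structure)."""
    gain: float        # measured degrees per commanded degree
    offset_deg: float  # measured angle when the command is 0
    min_deg: float     # lowest angle the design allows
    max_deg: float     # highest angle the design allows

    def command_for(self, desired_deg: float) -> float:
        """Return the command expected to produce the desired joint angle."""
        # Keep the request inside the joint's intended range of motion.
        clamped = min(max(desired_deg, self.min_deg), self.max_deg)
        # Invert the fitted model: measured = gain * command + offset.
        return (clamped - self.offset_deg) / self.gain


def fit_joint(cmd_low: float, meas_low: float,
              cmd_high: float, meas_high: float,
              min_deg: float, max_deg: float) -> JointCalibration:
    """Two-point fit of measured = gain * command + offset."""
    if cmd_high == cmd_low:
        raise ValueError("need two distinct test commands")
    gain = (meas_high - meas_low) / (cmd_high - cmd_low)
    if gain == 0:
        raise ValueError("joint did not move between test commands")
    offset = meas_low - gain * cmd_low
    return JointCalibration(gain, offset, min_deg, max_deg)


if __name__ == "__main__":
    # Made-up test data: commanding 0 and 90 degrees produced 2 and 83 degrees.
    cal = fit_joint(0.0, 2.0, 90.0, 83.0, min_deg=0.0, max_deg=80.0)
    print("command for 47 degrees:", cal.command_for(47.0))
```

A joint that moves "too far or not far enough" shows up here as a gain different from 1 or a non-zero offset.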

Sensors too must be calibrated. For example, a pressure sensor might be tested against a range of known pressures to ensure it can correctly distinguish between a light touch and a firm grip. A temperature sensor might be exposed to different temperatures to verify its accuracy. Any discrepancies identified during this testing phase would necessitate adjustments in the sensor's sensitivity or the algorithms that interpret its data.
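Testing a pressure sensor "against a range of known pressures" can be sketched as a small least-squares fit: apply known pressures, record the raw readings, and fit a line that converts one into the other. The function names and the sample numbers below are invented for illustration, and the assumption that the sensor responds linearly is exactly that, an assumption.

```python
def fit_linear(raw_readings, known_pressures):
    """Least-squares fit of pressure = slope * raw + intercept."""
    if len(raw_readings) != len(known_pressures) or len(raw_readings) < 2:
        raise ValueError("need at least two matching samples")
    n = len(raw_readings)
    mean_raw = sum(raw_readings) / n
    mean_p = sum(known_pressures) / n
    # Spread of the raw readings and how they co-vary with the known pressures.
    sxx = sum((r - mean_raw) ** 2 for r in raw_readings)
    sxy = sum((r - mean_raw) * (p - mean_p)
              for r, p in zip(raw_readings, known_pressures))
    if sxx == 0:
        raise ValueError("raw readings must not all be identical")
    slope = sxy / sxx
    intercept = mean_p - slope * mean_raw
    return slope, intercept


def to_pressure(raw, slope, intercept):
    """Convert a raw sensor reading into a calibrated pressure."""
    return slope * raw + intercept


if __name__ == "__main__":
    # Made-up calibration run: raw ADC counts versus applied pressure in kPa.
    raw = [100, 200, 300, 400]
    known_kpa = [0.0, 5.0, 10.0, 15.0]
    slope, intercept = fit_linear(raw, known_kpa)
    print("calibrated reading for raw=250:", to_pressure(250, slope, intercept))
```

If the fitted line leaves large errors at some of the known pressures, that is the kind of discrepancy that would call for adjusting the sensor or the algorithm that interprets it.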

Finally, the overall functionality of the hand is assessed. The hand's performance is evaluated through a series of tasks designed to test its range of motion, dexterity, responsiveness, and the interplay between its sensory feedback and actuation. These tasks could range from picking up small objects or delicate manipulation tasks to more force-demanding activities like gripping and lifting heavier items.

Machine learning algorithms are often employed to improve the hand's performance over time. These algorithms allow the hand to 'learn' from each task it performs, continually refining its movements and responses to increase precision, efficiency, and overall functionality. In the upcoming Tesla Bot series, we are going to explore the details of machine learning at play in humanoids. These engineering marvels of biomechanics are useless without the proper amount of self-learning brain.

Practical Use Cases: The Future is Here

Robotic hands have a broad range of practical uses. In industry, they can perform tasks that are dangerous for humans, such as handling hazardous materials or operating in extreme temperatures. They are also used in delicate tasks where human error can be costly, such as in microsurgery.

In prosthetics, advanced humanoid hands have provided amputees with a level of function that was unimaginable a few decades ago. These hands are often controlled by the user's remaining muscles and provide sensory feedback, allowing for a more natural and intuitive use.

In space exploration, humanoid hands are used on robotic astronauts, or "robonauts." NASA's Robonaut 2, for instance, has hands delicate enough to use the same tools that human astronauts use, yet strong enough to lift heavy payloads. These robonauts can perform extravehicular activities that might be risky for their human counterparts.

In social robotics, humanoid hands play a crucial role in creating robots that can interact with humans in more natural and intuitive ways. These robots could be used in a range of roles, from companions for the elderly to teachers for children.

And let's not forget the field of disaster response, where humanoid robots can navigate through debris, turn valves, or operate machinery in situations far too dangerous for humans. The Fukushima Daiichi nuclear disaster in 2011 underlined the potential of robots in such scenarios, as robots were sent where humans could not go due to radiation.

Challenges and Future Outlook

Despite these advancements, replicating the full range of human hand abilities remains a challenge. The human hand is not just an amazing machine; it is also a sensory organ, capable of feeling minute temperature changes, identifying texture variations, and detecting slight pressure differences.

Moreover, the ease with which humans can perform complex manipulations, like tying shoelaces or playing a musical instrument, is far beyond current robotic capabilities. Achieving such dexterity requires not just better mechanical design and sensory feedback, but also more advanced AI and machine learning algorithms.

The burgeoning field of neuromorphic engineering, which seeks to mimic the human brain's neural architecture, offers promising solutions to these challenges. By developing systems that process and react to information similarly to the human brain, we could create robotic hands that not only mimic the physical functions of human hands but also replicate our touch sensitivity and adaptability.

Neuromorphic

Neuromorphic engineering, or neuromorphic computing, is a concept developed by Carver Mead in the late 1980s as a way to create circuits that mimic the nervous system's neuro-biological architectures. The idea is to design artificial neural systems whose physical architecture and design principles are based on the human brain.

Traditional computing architectures, like the ones in our desktops, laptops, and even supercomputers, are based on the Von Neumann architecture, where data and instructions are sent back and forth between the processor and memory. This results in a bottleneck for data transfer, often called the Von Neumann bottleneck.

On the other hand, neuromorphic systems aim to emulate the brain's architecture, where processing and memory are not separated but are co-located in neurons, drastically reducing data transfer latency. This "computational locality" is one of the main advantages of neuromorphic systems.

At the heart of these systems are artificial neurons and synapses. Just as biological neurons transmit electrical signals to each other via synapses, artificial neurons in neuromorphic systems use electric pulses to communicate. The strength of these connections, or synaptic weights, can be adjusted, mimicking the learning process in the human brain.
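For readers who like to see it in code, here is a toy version of one such artificial neuron. It uses the leaky integrate-and-fire model, a common textbook simplification chosen here for illustration rather than something the systems above are confirmed to use. The parameter values (`leak`, `threshold`, `weight`) are arbitrary assumptions.

```python
def simulate_lif(inputs, weight=1.0, leak=0.9, threshold=1.0):
    """Simulate one leaky integrate-and-fire neuron.

    inputs: incoming signal strength at each time step.
    weight: synaptic weight scaling each input (the adjustable connection strength).
    leak: fraction of the membrane potential kept from one step to the next.
    threshold: potential at which the neuron emits a pulse and resets.
    Returns a list with 1 where the neuron fired and 0 where it stayed silent.
    """
    potential = 0.0
    spikes = []
    for value in inputs:
        # Old charge leaks away a little; new weighted input is added on top.
        potential = leak * potential + weight * value
        if potential >= threshold:
            spikes.append(1)
            potential = 0.0  # reset after firing
        else:
            spikes.append(0)
    return spikes


if __name__ == "__main__":
    steady_input = [0.5] * 6
    print("weak synapse:  ", simulate_lif(steady_input, weight=1.0))
    print("strong synapse:", simulate_lif(steady_input, weight=2.0))
```

The same input makes the neuron fire more often when the synaptic weight is larger, which is the knob a learning rule would turn.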

Neuromorphic systems hold promise in various domains, including machine learning, artificial intelligence, and robotics, where they can perform tasks like pattern recognition, decision-making, and motor control more efficiently than traditional architectures. They are also power-efficient, making them a promising avenue for wearable tech and mobile devices.

However, despite the potential, building practical neuromorphic systems is still a challenge. While there has been significant progress in developing artificial neurons and synapses, creating large-scale neuromorphic systems is no easy feat. One major hurdle is our limited understanding of the brain's complexities, which makes mimicking it accurately a daunting task.

Researchers around the world continue to explore and advance neuromorphic technologies, driving us closer to creating machines that may one day think and learn like us.

The dream of creating a truly humanoid hand is closer than ever, but it remains a dream. The journey ahead is exciting and full of possibilities, promising to revolutionize industry, medicine, and our daily lives. The art and science of crafting humanoid hands continue to intertwine, forging ahead on the path of innovation, discovery, and a deeper understanding of our own remarkable biology.

Beyond the Human Hand: Pioneering New Possibilities

Interestingly, while our fascination with recreating the human hand in its perfect form persists, some researchers are already looking beyond it. Why limit ourselves to the human hand's five-fingered configuration when we can pioneer new possibilities? This idea has spawned a whole new area of exploration within the field.

Imagine a robotic hand that could telescope to reach distant objects or shrink to manipulate minute components. Consider a hand that could change its hardness or temperature at will, or one that had a hundred fingers, each capable of acting independently. This is the realm of the 'superhuman' hand, and while it may seem like science fiction today, researchers are seriously investigating these possibilities.

In fact, some companies have already begun to commercialize robotic arms with 'extra' capabilities. One example is a robotic arm capable of changing its stiffness depending on the task at hand, allowing it to work with both fragile and heavy objects.

The Intersection of Ethics and Robotics

As we march forward in our quest to create humanoid hands, it's also essential to consider the ethical implications. The realm of humanoid robotics is rife with questions about human-robot interaction, privacy, security, and the potential displacement of jobs.

From a medical perspective, as prosthetic devices become more advanced, we are moving into a realm where they could potentially offer enhanced capabilities, above and beyond the human norm. This presents complex ethical questions about access, cost, and even the definition of what it means to be human.

Moreover, as robots become more prevalent in our society, questions about the implications for privacy and security arise. How do we ensure that robots in our homes and workplaces respect our privacy? How do we secure these robots against hacking or malicious use?

And as robots become more capable, there is the question of job displacement. Many of the tasks currently performed by humans in industries such as manufacturing, retail, and food service could potentially be automated. While this could lead to increased productivity and economic growth, it could also lead to job losses in these sectors.

The challenge for society will be to navigate these ethical dilemmas, and to ensure that the benefits of this technology are widely distributed. It's a conversation that needs to involve not just engineers and scientists, but policymakers, ethicists, and society as a whole.

Conclusion

The story of how humanoid hands are made is not just about wires, motors, and silicone. It's a narrative about our ceaseless pursuit to understand and recreate ourselves, about our age-old fascination with replicating human capabilities in metal and plastic. It's a journey that is replete with challenges and marked by significant milestones.

The road to creating the perfect humanoid hand is long and winding, but as each day passes, we're getting closer to this goal. In the process, we're not only redefining what's possible in the realm of robotics, but we're also reshaping our understanding of what it means to be human. For in striving to recreate our own image, we invariably learn more about ourselves. And that, perhaps, is the greatest marvel of all.

👋🏼 Remember, behind every great robot, there's an even greater human!